GNU Guix

This document describes GNU Guix version ce086e3, a functional package management tool written for the GNU system.

This manual is also available in Simplified Chinese (see GNU Guix参考手册), French (see Manuel de référence de GNU Guix), German (see Referenzhandbuch zu GNU Guix), Spanish (see Manual de referencia de GNU Guix), Brazilian Portuguese (see Manual de referência do GNU Guix), and Russian (see Руководство GNU Guix). If you would like to translate it into your native language, consider joining Weblate (see Translating Guix).

Table of Contents

- 1 Introduction

- 2 Installation

- 3 System Installation

- 3.1 Limitations

- 3.2 Hardware Considerations

- 3.3 USB Stick and DVD Installation

- 3.4 Preparing for Installation

- 3.5 Guided Graphical Installation

- 3.6 Manual Installation

- 3.7 After System Installation

- 3.8 Installing Guix in a Virtual Machine

- 3.9 Building the Installation Image

- 3.10 Building the Installation Image for ARM Boards

- 4 Getting Started

- 5 Package Management

- 6 Channels

- 6.1 Specifying Additional Channels

- 6.2 Using a Custom Guix Channel

- 6.3 Replicating Guix

- 6.4 Customizing the System-Wide Guix

- 6.5 Channel Authentication

- 6.6 Channels with Substitutes

- 6.7 Creating a Channel

- 6.8 Package Modules in a Sub-directory

- 6.9 Declaring Channel Dependencies

- 6.10 Specifying Channel Authorizations

- 6.11 Primary URL

- 6.12 Writing Channel News

- 7 Development

- 8 Programming Interface

- 9 Utilities

- 9.1 Invoking guix build

- 9.2 Invoking guix edit

- 9.3 Invoking guix download

- 9.4 Invoking guix hash

- 9.5 Invoking guix import

- 9.6 Invoking guix refresh

- 9.7 Invoking guix style

- 9.8 Invoking guix lint

- 9.9 Invoking guix size

- 9.10 Invoking guix graph

- 9.11 Invoking guix publish

- 9.12 Invoking guix challenge

- 9.13 Invoking guix copy

- 9.14 Invoking guix container

- 9.15 Invoking guix weather

- 9.16 Invoking guix processes

- 10 Foreign Architectures

- 11 System Configuration

- 11.1 Getting Started

- 11.2 Using the Configuration System

- 11.3 operating-system Reference

- 11.4 File Systems

- 11.5 Mapped Devices

- 11.6 Swap Space

- 11.7 User Accounts

- 11.8 Keyboard Layout

- 11.9 Locales

- 11.10 Services

- 11.10.1 Base Services

- 11.10.2 Scheduled Job Execution

- 11.10.3 Log Rotation

- 11.10.4 Networking Setup

- 11.10.5 Networking Services

- 11.10.6 Unattended Upgrades

- 11.10.7 X Window

- 11.10.8 Printing Services

- 11.10.9 Desktop Services

- 11.10.10 Sound Services

- 11.10.11 File Search Services

- 11.10.12 Database Services

- 11.10.13 Mail Services

- 11.10.14 Messaging Services

- 11.10.15 Telephony Services

- 11.10.16 File-Sharing Services

- 11.10.17 Monitoring Services

- 11.10.18 Kerberos Services

- 11.10.19 LDAP Services

- 11.10.20 Web Services

- 11.10.21 Certificate Services

- 11.10.22 DNS Services

- 11.10.23 VNC Services

- 11.10.24 VPN Services

- 11.10.25 Network File System

- 11.10.26 Samba Services

- 11.10.27 Continuous Integration

- 11.10.28 Power Management Services

- 11.10.29 Audio Services

- 11.10.30 Virtualization Services

- 11.10.31 Version Control Services

- 11.10.32 Game Services

- 11.10.33 PAM Mount Service

- 11.10.34 Guix Services

- 11.10.35 Linux Services

- 11.10.36 Hurd Services

- 11.10.37 Miscellaneous Services

- 11.11 Privileged Programs

- 11.12 X.509 Certificates

- 11.13 Name Service Switch

- 11.14 Initial RAM Disk

- 11.15 Bootloader Configuration

- 11.16 Invoking guix system

- 11.17 Invoking guix deploy

- 11.18 Running Guix in a Virtual Machine

- 11.19 Defining Services

- 12 System Troubleshooting Tips

- 13 Home Configuration

- 13.1 Declaring the Home Environment

- 13.2 Configuring the Shell

- 13.3 Home Services

- 13.3.1 Essential Home Services

- 13.3.2 Shells

- 13.3.3 Scheduled User’s Job Execution

- 13.3.4 Power Management Home Services

- 13.3.5 Managing User Daemons

- 13.3.6 Secure Shell

- 13.3.7 GNU Privacy Guard

- 13.3.8 Desktop Home Services

- 13.3.9 Guix Home Services

- 13.3.10 Fonts Home Services

- 13.3.11 Sound Home Services

- 13.3.12 Mail Home Services

- 13.3.13 Messaging Home Services

- 13.3.14 Media Home Services

- 13.3.15 Sway window manager

- 13.3.16 Networking Home Services

- 13.3.17 Miscellaneous Home Services

- 13.4 Invoking guix home

- 14 Documentation

- 15 Platforms

- 16 Creating System Images

- 17 Installing Debugging Files

- 18 Using TeX and LaTeX

- 19 Security Updates

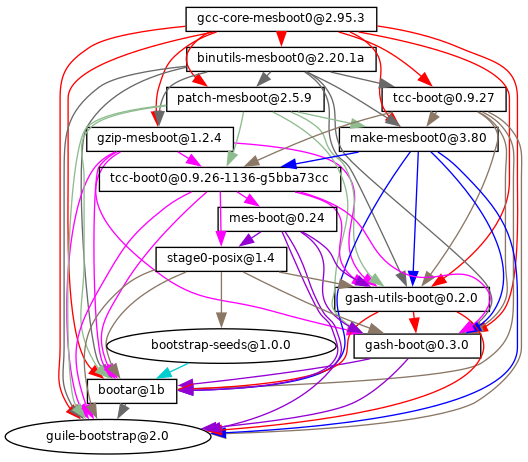

- 20 Bootstrapping

- 21 Porting to a New Platform

- 22 Contributing

- 22.1 Requirements

- 22.2 Building from Git

- 22.3 Running the Test Suite

- 22.4 Running Guix Before It Is Installed

- 22.5 The Perfect Setup

- 22.6 Alternative Setups

- 22.7 Source Tree Structure

- 22.8 Packaging Guidelines

- 22.8.1 Software Freedom

- 22.8.2 Package Naming

- 22.8.3 Version Numbers

- 22.8.4 Synopses and Descriptions

- 22.8.5 Snippets versus Phases

- 22.8.6 Cyclic Module Dependencies

- 22.8.7 Emacs Packages

- 22.8.8 Python Modules

- 22.8.9 Perl Modules

- 22.8.10 Java Packages

- 22.8.11 Rust Crates

- 22.8.12 Elm Packages

- 22.8.13 Fonts

- 22.9 Coding Style

- 22.10 Submitting Patches

- 22.11 Tracking Bugs and Changes

- 22.12 Teams

- 22.13 Making Decisions

- 22.14 Commit Access

- 22.15 Reviewing the Work of Others

- 22.16 Updating the Guix Package

- 22.17 Deprecation Policy

- 22.18 Writing Documentation

- 22.19 Translating Guix

- 22.20 Contributing to Guix’s Infrastructure

- 23 Acknowledgments

- Appendix A GNU Free Documentation License

- Concept Index

- Programming Index

1 Introduction

GNU Guix is a package management tool for and distribution of the GNU system. Guix makes it easy for unprivileged users to install, upgrade, or remove software packages, to roll back to a previous package set, to build packages from source, and generally assists with the creation and maintenance of software environments.

You can install GNU Guix on top of an existing GNU/Linux system where it complements the available tools without interference (see Installation), or you can use it as a standalone operating system distribution, Guix System. See GNU Distribution.

1.1 Managing Software the Guix Way

Guix provides a command-line package management interface (see Package Management), tools to help with software development (see Development), command-line utilities for more advanced usage (see Utilities), as well as Scheme programming interfaces (see Programming Interface). Its build daemon is responsible for building packages on behalf of users (see Setting Up the Daemon) and for downloading pre-built binaries from authorized sources (see Substitutes).

Guix includes package definitions for many GNU and non-GNU packages, all of which respect the user’s computing freedom. It is extensible: users can write their own package definitions (see Defining Packages) and make them available as independent package modules (see Package Modules). It is also customizable: users can derive specialized package definitions from existing ones, including from the command line (see Package Transformation Options).

Under the hood, Guix implements the functional package management discipline pioneered by Nix (see Acknowledgments). In Guix, the package build and installation process is seen as a function, in the mathematical sense. That function takes inputs, such as build scripts, a compiler, and libraries, and returns an installed package. As a pure function, its result depends solely on its inputs—for instance, it cannot refer to software or scripts that were not explicitly passed as inputs. A build function always produces the same result when passed a given set of inputs. It cannot alter the environment of the running system in any way; for instance, it cannot create, modify, or delete files outside of its build and installation directories. This is achieved by running build processes in isolated environments (or containers), where only their explicit inputs are visible.

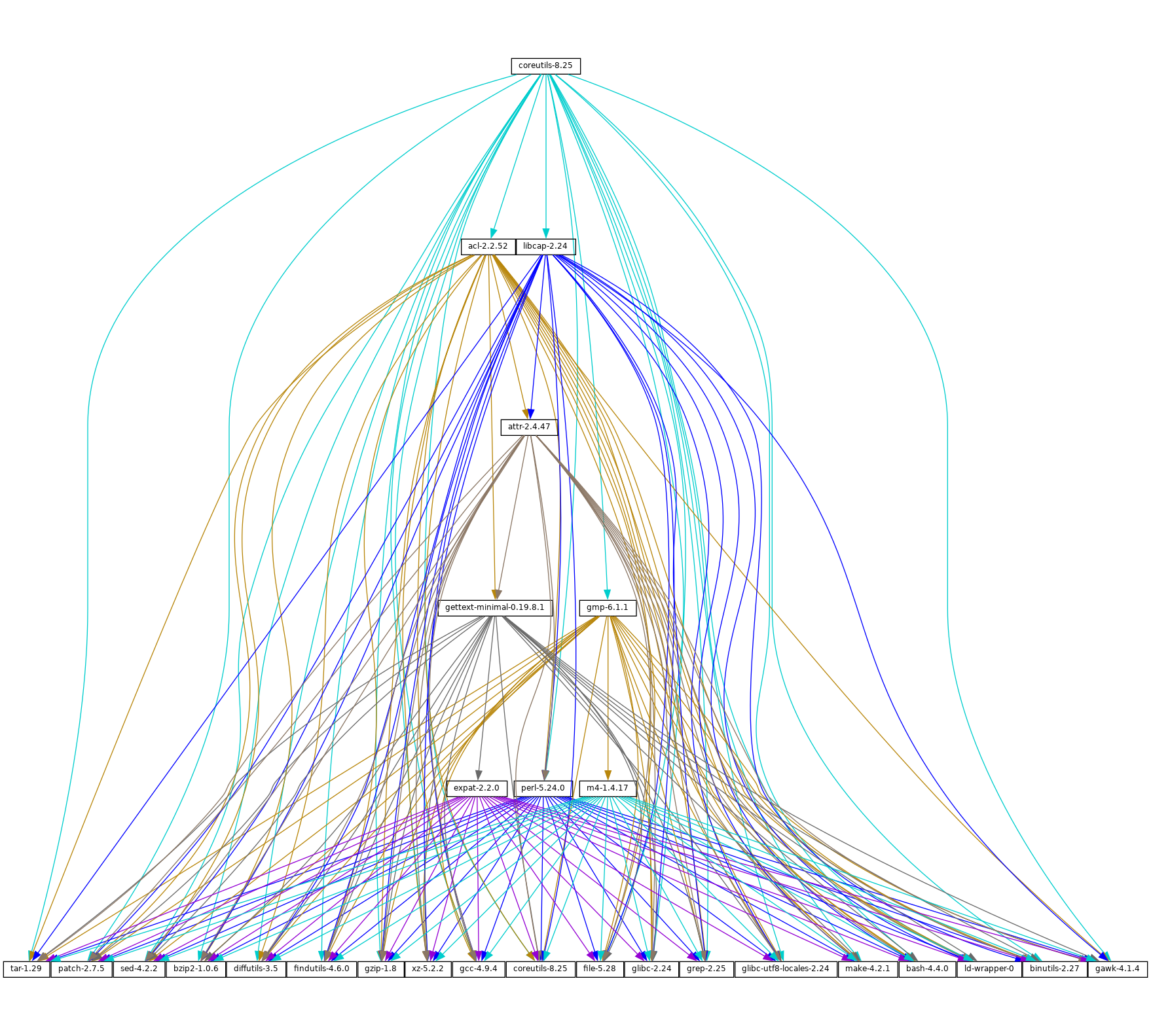

The result of package build functions is cached in the file system, in a special directory called the store (see The Store). Each package is installed in a directory of its own in the store—by default under /gnu/store. The directory name contains a hash of all the inputs used to build that package; thus, changing an input yields a different directory name.
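The naming scheme can be illustrated with a small sketch that splits a store file name into its hash prefix and its human-readable part. It assumes the conventional layout /gnu/store/HASH-NAME; the path used below is hypothetical and its hash is made up, and the function names are introduced here for illustration only.

```shell
#!/usr/bin/env bash
# Split a store file name into hash and name, assuming the layout
# /gnu/store/HASH-NAME[/sub/path].  Illustration only.

store_item_hash() {
  local base=${1#/gnu/store/}   # drop the store prefix
  base=${base%%/*}              # keep only the top-level store item
  printf '%s\n' "${base%%-*}"   # text before the first dash
}

store_item_name() {
  local base=${1#/gnu/store/}
  base=${base%%/*}
  printf '%s\n' "${base#*-}"    # text after the first dash
}

# Hypothetical path; the hash is a placeholder, not a real one.
example=/gnu/store/abcdefghijklmnopqrstuvwxyz012345-hello-2.12/bin/hello
store_item_hash "$example"
store_item_name "$example"
```

Two builds of the same package with different inputs would differ in the first part and share the second, which is why they can coexist in the store.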

This approach is the foundation for the salient features of Guix: support for transactional package upgrade and rollback, per-user installation, and garbage collection of packages (see Features).

1.2 GNU Distribution

Guix comes with a distribution of the GNU system consisting entirely of free software. The distribution can be installed on its own (see System Installation), but it is also possible to install Guix as a package manager on top of an installed GNU/Linux system (see Installation). When we need to distinguish between the two, we refer to the standalone distribution as Guix System.

The distribution provides core GNU packages such as GNU libc, GCC, and Binutils, as well as many GNU and non-GNU applications. The complete list of available packages can be browsed online or by running guix package (see Invoking guix package):

guix package --list-available
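The listing is meant to be post-processed with ordinary text tools. The sketch below assumes each output line holds tab-separated fields with the package name first and the version second; the sample line, including its source location, is made up for illustration, and name_at_version is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Turn tab-separated "NAME<TAB>VERSION<TAB>..." lines into NAME@VERSION.
# Assumption: name and version are the first two tab-separated fields.

name_at_version() {
  awk -F '\t' '{ print $1 "@" $2 }'
}

# Sample input standing in for real output (fields are illustrative).
printf 'hello\t2.12.1\tout\tgnu/packages/base.scm:92:2\n' | name_at_version
```

On a machine with Guix installed, the same filter could be fed from the command itself, for example guix package --list-available | name_at_version.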

Our goal is to provide a practical 100% free software distribution of Linux-based and other variants of GNU, with a focus on the promotion and tight integration of GNU components, and an emphasis on programs and tools that help users exert that freedom.

Packages are currently available on the following platforms:

- x86_64-linux: Intel/AMD x86_64 architecture, Linux-Libre kernel.

- i686-linux: Intel 32-bit architecture (IA32), Linux-Libre kernel.

- armhf-linux: ARMv7-A architecture with hard float, Thumb-2 and NEON, using the EABI hard-float application binary interface (ABI), and Linux-Libre kernel.

- aarch64-linux: little-endian 64-bit ARMv8-A processors, Linux-Libre kernel.

- i586-gnu: GNU/Hurd on the Intel 32-bit architecture (IA32). This configuration is experimental and under development. The easiest way for you to give it a try is by setting up an instance of hurd-vm-service-type on your GNU/Linux machine (see hurd-vm-service-type). See Contributing, on how to help!

- x86_64-gnu: GNU/Hurd on the x86_64 Intel/AMD 64-bit architecture. This configuration is even more experimental and under heavy upstream development.

- mips64el-linux (unsupported): little-endian 64-bit MIPS processors, specifically the Loongson series, n32 ABI, and Linux-Libre kernel. This configuration is no longer fully supported; in particular, there is no ongoing work to ensure that this architecture still works. Should someone decide they wish to revive this architecture then the code is still available.

- powerpc-linux (unsupported): big-endian 32-bit PowerPC processors, specifically the PowerPC G4 with AltiVec support, and Linux-Libre kernel. This configuration is not fully supported and there is no ongoing work to ensure this architecture works.

- powerpc64le-linux: little-endian 64-bit Power ISA processors, Linux-Libre kernel. This includes POWER9 systems such as the RYF Talos II mainboard. This platform is available as a "technology preview": although it is supported, substitutes are not yet available from the build farm (see Substitutes), and some packages may fail to build (see Tracking Bugs and Changes). That said, the Guix community is actively working on improving this support, and now is a great time to try it and get involved!

- riscv64-linux: little-endian 64-bit RISC-V processors, specifically RV64GC, and Linux-Libre kernel. This platform is available as a "technology preview": although it is supported, substitutes are not yet available from the build farm (see Substitutes), and some packages may fail to build (see Tracking Bugs and Changes). That said, the Guix community is actively working on improving this support, and now is a great time to try it and get involved!

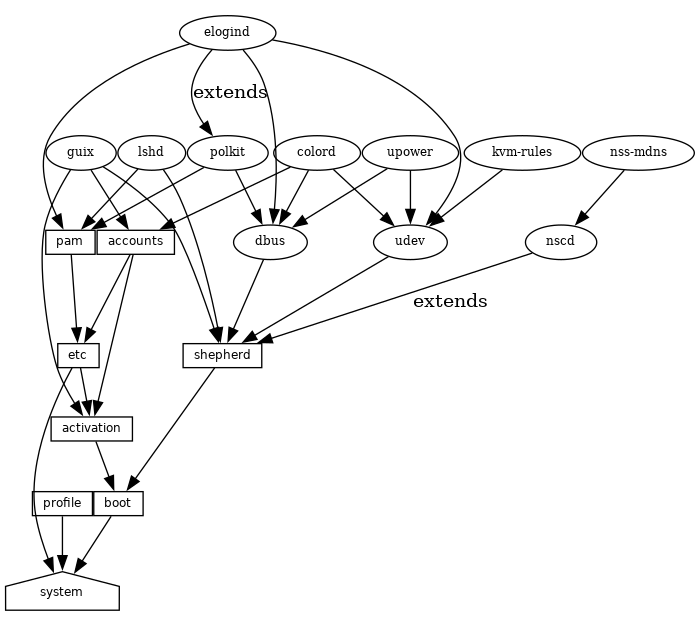

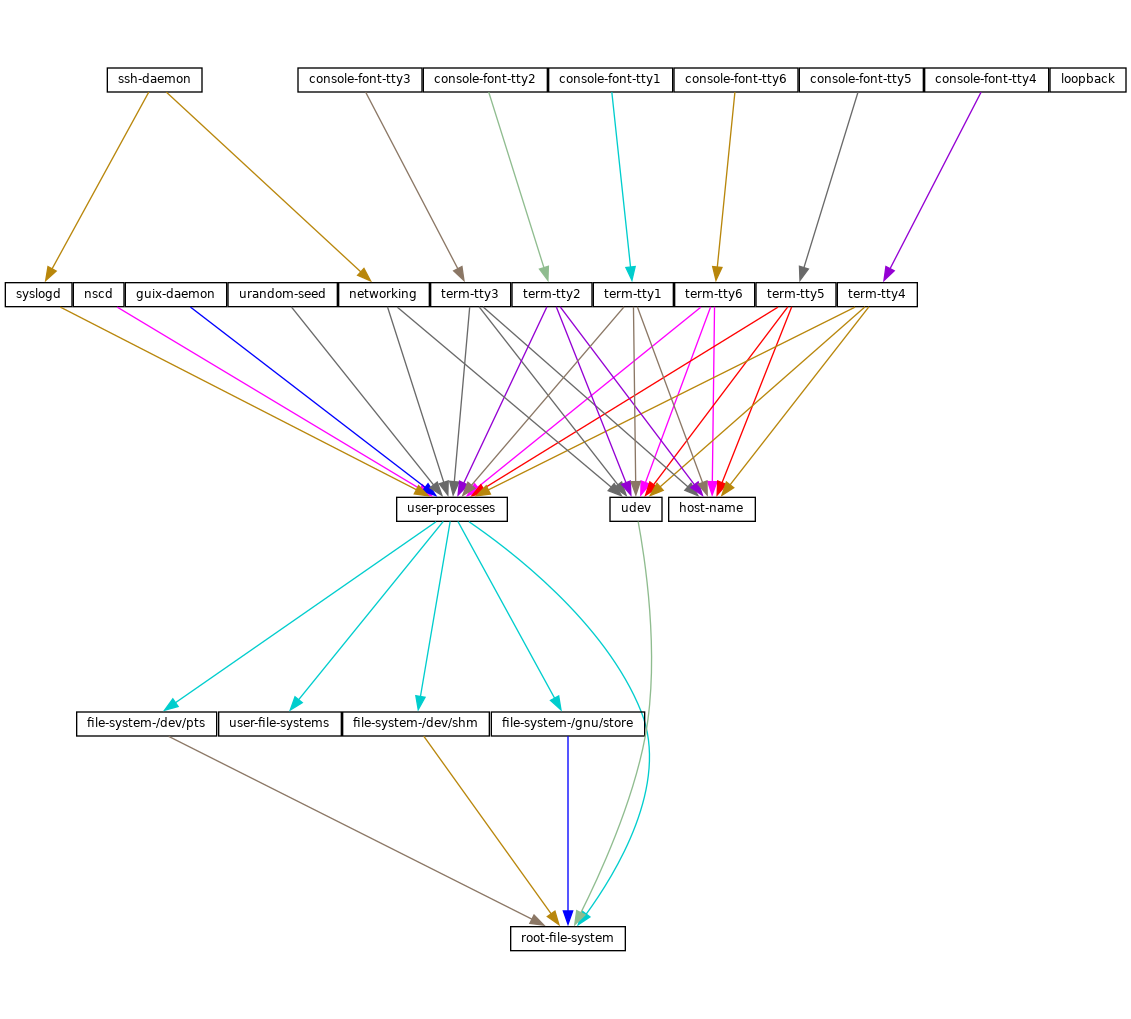

With Guix System, you declare all aspects of the operating system configuration and Guix takes care of instantiating the configuration in a transactional, reproducible, and stateless fashion (see System Configuration). Guix System uses the Linux-libre kernel, the Shepherd initialization system (see Introduction in The GNU Shepherd Manual), the well-known GNU utilities and tool chain, as well as the graphical environment or system services of your choice.

Guix System is available on all the above platforms except mips64el-linux, powerpc-linux, powerpc64le-linux and riscv64-linux.

For information on porting to other architectures or kernels, see Porting to a New Platform.

Building this distribution is a cooperative effort, and you are invited to join! See Contributing, for information about how you can help.

2 Installation

You can install the package management tool Guix on top of an existing GNU/Linux or GNU/Hurd system, referred to as a foreign distro. If, instead, you want to install the complete, standalone GNU system distribution, Guix System, see System Installation. This section is concerned only with the installation of Guix on a foreign distro.

Important: This section only applies to systems without Guix. Following it for existing Guix installations will overwrite important system files.

When installed on a foreign distro, GNU Guix complements the available tools without interference. Its data lives exclusively in two directories, usually /gnu/store and /var/guix; other files on your system, such as /etc, are left untouched.

Once installed, Guix can be updated by running guix pull (see Invoking guix pull).

2.1 Binary Installation

This section describes how to install Guix from a self-contained tarball providing binaries for Guix and for all its dependencies. This is often quicker than installing from source, described later (see Building from Git).

Important: This section only applies to systems without Guix. Following it for existing Guix installations will overwrite important system files.

Some GNU/Linux distributions, such as Debian, Ubuntu, and openSUSE, provide Guix through their own package managers. The version of Guix may be older than ce086e3 but you can update it afterwards by running ‘guix pull’.

We advise system administrators who install Guix, whether from the installation script or via the native package manager of their foreign distribution, to also regularly read and follow security notices, as shown by guix pull.

For Debian or derivatives such as Ubuntu or Trisquel, call:

sudo apt install guix

Likewise, on openSUSE:

sudo zypper install guix

If you are running Parabola, after enabling the pcr (Parabola Community Repo) repository, you can install Guix with:

sudo pacman -S guix

The Guix project also provides a shell script, guix-install.sh, which automates the binary installation process without use of a foreign distro package manager. Use of guix-install.sh requires Bash, GnuPG, GNU tar, wget, and Xz.
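Before downloading the script, it can be handy to confirm that these tools are present. The following is a minimal sketch, not part of guix-install.sh; the executable names gpg, tar, wget, and xz are assumptions about how the tools are installed on a typical system, and missing_tools is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the names of the given commands that cannot be found in PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Assumed executable names for Bash, GnuPG, GNU tar, wget, and Xz.
missing=$(missing_tools bash gpg tar wget xz)
if [ -n "$missing" ]; then
  # Unquoted on purpose: print one line per missing tool.
  printf 'Missing before running guix-install.sh: %s\n' $missing
else
  echo 'All required tools were found.'
fi
```

The check only looks for executables; it does not verify that tar is GNU tar or that the versions are recent enough.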

The script guides you through the following:

- Downloading and extracting the binary tarball

- Setting up the build daemon

- Making the ‘guix’ command available to non-root users

- Configuring substitute servers

As root, run:

# cd /tmp
# wget https://git.savannah.gnu.org/cgit/guix.git/plain/etc/guix-install.sh
# chmod +x guix-install.sh
# ./guix-install.sh

The script to install Guix is also packaged in Parabola (in the pcr repository). You can install and run it with:

sudo pacman -S guix-installer
sudo guix-install.sh

Note: By default, guix-install.sh will configure Guix to download pre-built package binaries, called substitutes (see Substitutes), from the project’s build farms. If you choose not to permit this, Guix will build everything from source, making each installation and upgrade very expensive. See On Trusting Binaries for a discussion of why you may want to build packages from source.

To use substitutes from bordeaux.guix.gnu.org, ci.guix.gnu.org or a mirror, you must authorize them. For example,

# guix archive --authorize < \
     ~root/.config/guix/current/share/guix/bordeaux.guix.gnu.org.pub
# guix archive --authorize < \
     ~root/.config/guix/current/share/guix/ci.guix.gnu.org.pub

When you’re done installing Guix, see Application Setup for extra configuration you might need, and Getting Started for your first steps!

Note: The binary installation tarball can be (re)produced and verified simply by running the following command in the Guix source tree:

make guix-binary.system.tar.xz

... which, in turn, runs:

guix pack -s system --localstatedir \
  --profile-name=current-guix guix

See Invoking guix pack, for more info on this handy tool.

Should you eventually want to uninstall Guix, run the same script with the --uninstall flag:

./guix-install.sh --uninstall

With --uninstall, the script irreversibly deletes all the Guix files, configuration, and services.

2.2 Setting Up the Daemon

During installation, the build daemon that must be running to use Guix has already been set up, and you can run guix commands in your terminal program (see Getting Started):

guix build hello

If this runs through without error, feel free to skip this section. You should continue with the following section, Application Setup.

However, now would be a good time to replace outdated daemon versions, tweak the daemon, perform builds on other machines (see Using the Offload Facility), or start it manually in special environments like “chroots” (see Chrooting into an existing system) or WSL (not needed for WSL images created with Guix, see wsl2-image-type). If you want to know more or optimize your system, this section is worth reading.

Operations such as building a package or running the garbage collector are all performed by a specialized process, the build daemon, on behalf of clients. Only the daemon may access the store and its associated database. Thus, any operation that manipulates the store goes through the daemon. For instance, command-line tools such as guix package and guix build communicate with the daemon (via remote procedure calls) to instruct it what to do.

The following sections explain how to prepare the build daemon’s environment. See Substitutes for how to allow the daemon to download pre-built binaries.

2.2.1 Build Environment Setup

In a standard multi-user setup, Guix and its daemon (the guix-daemon program) are installed by the system administrator; /gnu/store is owned by root and guix-daemon runs as root. Unprivileged users may use Guix tools to build packages or otherwise access the store, and the daemon will do it on their behalf, ensuring that the store is kept in a consistent state, and allowing built packages to be shared among users.

When guix-daemon runs as root, you may not want package build processes themselves to run as root too, for obvious security reasons. To avoid that, a special pool of build users should be created for use by build processes started by the daemon. These build users need not have a shell and a home directory: they will just be used when the daemon drops root privileges in build processes. Having several such users allows the daemon to launch distinct build processes under separate UIDs, which guarantees that they do not interfere with each other, an essential feature since builds are regarded as pure functions (see Introduction).

On a GNU/Linux system, a build user pool may be created like this (using Bash syntax and the shadow commands):

# groupadd --system guixbuild
# for i in $(seq -w 1 10);
  do
    useradd -g guixbuild -G guixbuild           \
            -d /var/empty -s $(which nologin)   \
            -c "Guix build user $i" --system    \
            guixbuilder$i;
  done

The number of build users determines how many build jobs may run in parallel, as specified by the --max-jobs option (see --max-jobs). To use guix system vm and related commands, you may need to add the build users to the kvm group so they can access /dev/kvm, using -G guixbuild,kvm instead of -G guixbuild (see Invoking guix system).
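To preview what such a pool would look like before touching the user database, a dry run can print the useradd invocations instead of executing them. This is a sketch only: print_build_user_commands is a hypothetical helper, and it pads user numbers to two digits, which matches seq -w 1 10 for a pool of ten users.

```shell
#!/usr/bin/env bash
# Print (without running) the useradd commands for a pool of build users.
#   $1: number of build users
#   $2: optional comma-separated supplementary groups (default: guixbuild)
print_build_user_commands() {
  local count=$1 extra_groups=${2:-guixbuild} i suffix
  for i in $(seq 1 "$count"); do
    suffix=$(printf '%02d' "$i")   # 01, 02, ... as with "seq -w 1 10"
    printf 'useradd -g guixbuild -G %s -d /var/empty -s "$(which nologin)" -c "Guix build user %s" --system guixbuilder%s\n' \
      "$extra_groups" "$suffix" "$suffix"
  done
}

# Preview a pool of ten users that can also access /dev/kvm.
print_build_user_commands 10 guixbuild,kvm
```

Once the output looks right, the printed lines can be reviewed and run as root.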

The guix-daemon program may then be run as root with the following command:

# guix-daemon --build-users-group=guixbuild

This way, the daemon starts build processes in a chroot, under one of the guixbuilder users. On GNU/Linux, by default, the chroot environment contains nothing but:

- a minimal /dev directory, created mostly independently from the host /dev;

- the /proc directory; it only shows the processes of the container since a separate PID name space is used;

- /etc/passwd with an entry for the current user and an entry for user nobody;

- /etc/group with an entry for the user’s group;

- /etc/hosts with an entry that maps localhost to 127.0.0.1;

- a writable /tmp directory.

The chroot does not contain a /home directory, and the HOME environment variable is set to the non-existent /homeless-shelter. This helps to highlight inappropriate uses of HOME in the build scripts of packages.

All this is usually enough to ensure details of the environment do not influence build processes. In some exceptional cases where more control is needed (typically over the date, kernel, or CPU), you can resort to a virtual build machine (see virtual build machines).

You can influence the directory where the daemon stores build trees via the TMPDIR environment variable. However, the build tree within the chroot is always called /tmp/guix-build-name.drv-0, where name is the derivation name (e.g., coreutils-8.24). This way, the value of TMPDIR does not leak inside build environments, which avoids discrepancies in cases where build processes capture the name of their build tree.
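As a small illustration of that naming rule, the helper below (hypothetical, not part of Guix) derives the in-chroot build tree name from a derivation name and deliberately ignores TMPDIR.

```shell
#!/usr/bin/env bash
# Compute the build tree name as seen from inside the chroot.
# The result depends only on the derivation name, never on TMPDIR.
build_tree_name() {
  printf '/tmp/guix-build-%s.drv-0\n' "$1"
}

# The TMPDIR setting has no effect on the name seen by the build.
TMPDIR=/var/scratch build_tree_name coreutils-8.24
```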

The daemon also honors the http_proxy and https_proxy environment variables for HTTP and HTTPS downloads it performs, be it for fixed-output derivations (see Derivations) or for substitutes (see Substitutes).

If you are installing Guix as an unprivileged user, it is still possible to run guix-daemon provided you pass --disable-chroot. However, build processes will not be isolated from one another, nor from the rest of the system. Thus, build processes may interfere with each other, and may access programs, libraries, and other files available on the system, making it much harder to view them as pure functions.

2.2.2 Using the Offload Facility

When desired, the build daemon can offload derivation builds to other machines running Guix, using the offload build hook. When that feature is enabled, a list of user-specified build machines is read from /etc/guix/machines.scm; every time a build is requested, for instance via guix build, the daemon attempts to offload it to one of the machines that satisfy the constraints of the derivation, in particular its system types (e.g., x86_64-linux). A single machine can have multiple system types, either because its architecture natively supports it, via emulation (see Transparent Emulation with QEMU), or both. Missing prerequisites for the build are copied over SSH to the target machine, which then proceeds with the build; upon success the output(s) of the build are copied back to the initial machine.

The offload facility comes with a basic scheduler that attempts to select the best machine. The best machine is chosen among the available machines based on criteria such as:

- The availability of a build slot. A build machine can have as many build slots (connections) as the value of the parallel-builds field of its build-machine object.

- Its relative speed, as defined via the speed field of its build-machine object.

- Its load. The normalized machine load must be lower than a threshold value, configurable via the overload-threshold field of its build-machine object.

- Disk space availability. More than 100 MiB must be available.
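The criteria above can be pictured as a filter-then-rank pass. The sketch below is an illustration only and is not the actual offload scheduler: the input format (one line per machine with name, free slots, speed, load, threshold, and free MiB), the tie-breaking, and the slowdisk.example.org machine are all assumptions made for this example.

```shell
#!/usr/bin/env bash
# Illustration only: NOT the real scheduler of the offload hook.
# Input lines: NAME FREE_SLOTS SPEED LOAD THRESHOLD FREE_MIB
# Keep machines with a free slot, load below their threshold, and more
# than 100 MiB free; among those, print the one with the highest speed.
pick_machine() {
  awk '$2 > 0 && $4 < $5 && $6 > 100 {
         if (best == "" || $3 > speed) { best = $1; speed = $3 }
       }
       END { if (best != "") print best }'
}

# Hypothetical snapshot of three machines.
pick_machine <<'EOF'
eightysix.example.org 1 2.0 0.35 0.8 20480
armeight.example.org  0 1.0 0.10 0.8 51200
slowdisk.example.org  2 3.0 0.20 0.8 64
EOF
```

In this snapshot the second machine has no free slot and the third lacks disk space, so the first one is selected even though it is not the fastest.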

The /etc/guix/machines.scm file typically looks like this:

(list (build-machine
        (name "eightysix.example.org")
        (systems (list "x86_64-linux" "i686-linux"))
        (host-key "ssh-ed25519 AAAAC3Nza…")
        (user "bob")
        (speed 2.))     ;incredibly fast!

      (build-machine
        (name "armeight.example.org")
        (systems (list "aarch64-linux"))
        (host-key "ssh-rsa AAAAB3Nza…")
        (user "alice")

        ;; Remember 'guix offload' is spawned by
        ;; 'guix-daemon' as root.
        (private-key "/root/.ssh/identity-for-guix")))

In the example above we specify a list of two build machines, one for the x86_64 and i686 architectures and one for the aarch64 architecture.

In fact, this file is (not surprisingly!) a Scheme file that is evaluated when the offload hook is started. Its return value must be a list of build-machine objects. While this example shows a fixed list of build machines, one could imagine, say, using DNS-SD to return a list of potential build machines discovered in the local network (see Guile-Avahi in Using Avahi in Guile Scheme Programs). The build-machine data type is detailed below.

- Data Type: build-machine

This data type represents build machines to which the daemon may offload builds. The important fields are:

- name: The host name of the remote machine.

- systems: The system types the remote machine supports, e.g., (list "x86_64-linux" "i686-linux").

- user: The user account on the remote machine to use when connecting over SSH. Note that the SSH key pair must not be passphrase-protected, to allow non-interactive logins.

- host-key: This must be the machine’s SSH public host key in OpenSSH format. This is used to authenticate the machine when we connect to it. It is a long string that looks like this:

  ssh-ed25519 AAAAC3NzaC…mde+UhL hint@example.org

  If the machine is running the OpenSSH daemon, sshd, the host key can be found in a file such as /etc/ssh/ssh_host_ed25519_key.pub.

  If the machine is running the SSH daemon of GNU lsh, lshd, the host key is in /etc/lsh/host-key.pub or a similar file. It can be converted to the OpenSSH format using lsh-export-key (see Converting keys in LSH Manual):

  $ lsh-export-key --openssh < /etc/lsh/host-key.pub
  ssh-rsa AAAAB3NzaC1yc2EAAAAEOp8FoQAAAQEAs1eB46LV…

A number of optional fields may be specified:

- port (default: 22): Port number of SSH server on the machine.

- private-key (default: ~root/.ssh/id_rsa): The SSH private key file to use when connecting to the machine, in OpenSSH format. This key must not be protected with a passphrase.

  Note that the default value is the private key of the root account. Make sure it exists if you use the default.

- compression (default: "zlib@openssh.com,zlib"), compression-level (default: 3): The SSH-level compression methods and compression level requested.

  Note that offloading relies on SSH compression to reduce bandwidth usage when transferring files to and from build machines.

- daemon-socket (default: "/var/guix/daemon-socket/socket"): File name of the Unix-domain socket guix-daemon is listening to on that machine.

- overload-threshold (default: 0.8): The load threshold above which a potential offload machine is disregarded by the offload scheduler. The value roughly translates to the total processor usage of the build machine, ranging from 0.0 (0%) to 1.0 (100%). It can also be disabled by setting overload-threshold to #f.

- parallel-builds (default: 1): The number of builds that may run in parallel on the machine.

- speed (default: 1.0): A “relative speed factor”. The offload scheduler will tend to prefer machines with a higher speed factor.

- features (default: '()): A list of strings denoting specific features supported by the machine. An example is "kvm" for machines that have the KVM Linux modules and corresponding hardware support. Derivations can request features by name, and they will be scheduled on matching build machines.

Note: On Guix System, instead of managing /etc/guix/machines.scm independently, you can choose to specify build machines directly in the operating-system declaration, in the build-machines field of guix-configuration. See build-machines field of guix-configuration.

The guix command must be in the search path on the build machines. You can check whether this is the case by running:

ssh build-machine guix repl --version

There is one last thing to do once machines.scm is in place. As explained above, when offloading, files are transferred back and forth between the machine stores. For this to work, you first need to generate a key pair on each machine to allow the daemon to export signed archives of files from the store (see Invoking guix archive):

# guix archive --generate-key

Note: This key pair is not related to the SSH key pair that was previously mentioned in the description of the build-machine data type.

Each build machine must authorize the key of the master machine so that it accepts store items it receives from the master:

# guix archive --authorize < master-public-key.txt

Likewise, the master machine must authorize the key of each build machine.

All the fuss with keys is here to express pairwise mutual trust relations between the master and the build machines. Concretely, when the master receives files from a build machine (and vice versa), its build daemon can make sure they are genuine, have not been tampered with, and that they are signed by an authorized key.
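The pairwise nature of this exchange can be made explicit with a dry-run helper that lists which public key has to be authorized where. This is a sketch: print_key_exchange_plan is hypothetical, and HOST.pub stands for a copy of the public signing key generated on HOST, however you choose to transfer it.

```shell
#!/usr/bin/env bash
# Print the authorizations needed between a master and its build machines.
#   $1: master host name; remaining arguments: build machine host names.
# "HOST.pub" denotes a copy of HOST's public signing key (an assumption
# about how you name the copied files).
print_key_exchange_plan() {
  local master=$1 machine
  shift
  for machine in "$@"; do
    printf 'on %s: guix archive --authorize < %s.pub\n' "$machine" "$master"
    printf 'on %s: guix archive --authorize < %s.pub\n' "$master" "$machine"
  done
}

print_key_exchange_plan master.example.org \
  eightysix.example.org armeight.example.org
```

Each build machine contributes two lines, one per direction of trust, so a setup with N build machines needs 2N authorizations.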

To test whether your setup is operational, run this command on the master node:

# guix offload test

This will attempt to connect to each of the build machines specified in /etc/guix/machines.scm, make sure Guix is available on each machine, attempt to export to the machine and import from it, and report any error in the process.

If you want to test a different machine file, just specify it on the command line:

# guix offload test machines-qualif.scm

Last, you can test the subset of the machines whose name matches a regular expression like this:

# guix offload test machines.scm '\.gnu\.org$'
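To preview which names such an expression selects before running the test, the same pattern can be tried against a list of host names. The sketch assumes the expression behaves like a POSIX extended regular expression, as understood by grep -E; matching_machines and the host names are hypothetical.

```shell
#!/usr/bin/env bash
# Print the host names (arguments 2..n) that match the regexp in $1.
# Assumption: extended-regexp semantics comparable to "grep -E".
matching_machines() {
  local regexp=$1
  shift
  printf '%s\n' "$@" | grep -E -- "$regexp"
}

# Only names ending in ".gnu.org" are kept.
matching_machines '\.gnu\.org$' \
  build1.gnu.org eightysix.example.org gnu.org.example.com
```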

To display the current load of all build hosts, run this command on the main node:

# guix offload status

2.2.3 SELinux Support

Guix includes an SELinux policy file at etc/guix-daemon.cil that can be installed on a system where SELinux is enabled, in order to label Guix files and to specify the expected behavior of the daemon. Since Guix System does not provide an SELinux base policy, the daemon policy cannot be used on Guix System.

2.2.3.1 Installing the SELinux policy

Note: The guix-install.sh binary installation script offers to perform the steps below for you (see Binary Installation).

To install the policy run this command as root:

semodule -i /var/guix/profiles/per-user/root/current-guix/share/selinux/guix-daemon.cil

Then, as root, relabel the file system, possibly after making it writable:

mount -o remount,rw /gnu/store
restorecon -R /gnu /var/guix

At this point you can start or restart guix-daemon; on a distribution that uses systemd as its service manager, you can do that with:

systemctl restart guix-daemon

Once the policy is installed, the file system has been relabeled, and the daemon has been restarted, it should be running in the guix_daemon_t context. You can confirm this with the following command:

ps -Zax | grep guix-daemon
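When scripting this check, the security context can be taken from the first column of the output and its type field compared with guix_daemon_t. The sketch below assumes the usual user:role:type[:level] layout of SELinux contexts; the sample lines are made up, and in_guix_daemon_domain is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Succeed if one line of "ps -Zax" output shows the guix_daemon_t type.
# Assumption: the context is the first column, as user:role:type[:level].
in_guix_daemon_domain() {
  local context=${1%%[[:space:]]*}   # first whitespace-delimited column
  local setype
  setype=$(printf '%s\n' "$context" | cut -d: -f3)
  [ "$setype" = guix_daemon_t ]
}

# Made-up sample line, standing in for real "ps -Zax" output.
line='system_u:system_r:guix_daemon_t:s0 1234 ? Ssl 0:00 guix-daemon --build-users-group=guixbuild'
if in_guix_daemon_domain "$line"; then
  echo 'guix-daemon runs in the expected domain'
else
  echo 'guix-daemon is NOT in guix_daemon_t'
fi
```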

Monitor the SELinux log files as you run a command like guix build hello to convince yourself that SELinux permits all necessary operations.

2.2.3.2 Limitations

This policy is not perfect. Here is a list of limitations or quirks that should be considered when deploying the provided SELinux policy for the Guix daemon.

- guix_daemon_socket_t isn’t actually used. None of the socket operations involve contexts that have anything to do with guix_daemon_socket_t. It doesn’t hurt to have this unused label, but it would be preferable to define socket rules for only this label.

- guix gc cannot access arbitrary links to profiles. By design, the file label of the destination of a symlink is independent of the file label of the link itself. Although all profiles under $localstatedir are labelled, the links to these profiles inherit the label of the directory they are in. For links in the user’s home directory this will be user_home_t. But for links from the root user’s home directory, or /tmp, or the HTTP server’s working directory, etc., this won’t work. guix gc would be prevented from reading and following these links.

- The daemon’s feature to listen for TCP connections might no longer work. This might require extra rules, because SELinux treats network sockets differently from files.

- Currently all files with a name matching the regular expression /gnu/store/.+-(guix-.+|profile)/bin/guix-daemon are assigned the label guix_daemon_exec_t; this means that any file with that name in any profile would be permitted to run in the guix_daemon_t domain. This is not ideal. An attacker could build a package that provides this executable and convince a user to install and run it, which lifts it into the guix_daemon_t domain. At that point SELinux could not prevent it from accessing files that are allowed for processes in that domain.

  You will need to relabel the store directory after all upgrades to guix-daemon, such as after running guix pull. Assuming the store is in /gnu, you can do this with restorecon -vR /gnu, or by other means provided by your operating system.

  We could generate a much more restrictive policy at installation time, so that only the exact file name of the currently installed guix-daemon executable would be labelled with guix_daemon_exec_t, instead of using a broad regular expression. The downside is that root would have to install or upgrade the policy at installation time whenever the Guix package that provides the effectively running guix-daemon executable is upgraded.
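To get a feel for how broad the file-name pattern in the last item is, the following sketch tests a few store paths against it with grep -E. The helper name would_get_daemon_label and the three sample paths are made up for illustration, and the explicit ^ and $ anchors are an assumption about how the expression is applied to whole file names. The real labeling is done by SELinux, not by grep.

```shell
#!/bin/sh
# Sketch only: mimics the policy's file-name pattern with grep -E.
# The anchors (^ and $) are an assumption; the sample paths are hypothetical.
pattern='^/gnu/store/.+-(guix-.+|profile)/bin/guix-daemon$'

would_get_daemon_label() {
    # Succeeds when the given path matches the broad pattern.
    printf '%s\n' "$1" | grep -Eq "$pattern"
}

for path in \
    /gnu/store/aaaa-guix-1.4.0/bin/guix-daemon \
    /gnu/store/bbbb-guix-lookalike-0.1/bin/guix-daemon \
    /gnu/store/cccc-hello-2.12/bin/guix-daemon
do
    if would_get_daemon_label "$path"; then
        echo "match:    $path"
    else
        echo "no match: $path"
    fi
done
```

The second path shows the concern raised above: any package whose name merely starts with guix- would match, whoever built it.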


2.3 Invoking guix-daemon

The guix-daemon program implements all the functionality to access the store. This includes launching build processes, running the garbage collector, querying the availability of a build result, etc. It is normally run as root like this:

# guix-daemon --build-users-group=guixbuild

This daemon can also be started following the systemd “socket activation” protocol (see make-systemd-constructor in The GNU Shepherd Manual).

For details on how to set it up, see Setting Up the Daemon.

By default, guix-daemon launches build processes under different UIDs, taken from the build group specified with --build-users-group. In addition, each build process is run in a chroot environment that only contains the subset of the store that the build process depends on, as specified by its derivation (see derivation), plus a set of specific system directories. By default, the latter contains /dev and /dev/pts. Furthermore, on GNU/Linux, the build environment is a container: in addition to having its own file system tree, it has a separate mount name space, its own PID name space, network name space, etc. This helps achieve reproducible builds (see Features).

When the daemon performs a build on behalf of the user, it creates a build directory under /tmp or under the directory specified by its TMPDIR environment variable. This directory is shared with the container for the duration of the build, though within the container, the build tree is always called /tmp/guix-build-name.drv-0.

The build directory is automatically deleted upon completion, unless the build failed and the client specified --keep-failed (see --keep-failed).

The daemon listens for connections and spawns one sub-process for each session started by a client (one of the guix sub-commands). The guix processes command allows you to get an overview of the activity on your system by viewing each of the active sessions and clients. See Invoking guix processes, for more information.

The following command-line options are supported:

--build-users-group=group

Take users from group to run build processes (see build users).

--no-substitutes

Do not use substitutes for build products. That is, always build things locally instead of allowing downloads of pre-built binaries (see Substitutes).

When the daemon runs with --no-substitutes, clients can still explicitly enable substitution via the set-build-options remote procedure call (see The Store).

--substitute-urls=urls

Consider urls the default whitespace-separated list of substitute source URLs. When this option is omitted, ‘https://bordeaux.guix.gnu.org https://ci.guix.gnu.org’ is used.

This means that substitutes may be downloaded from urls, as long as they are signed by a trusted signature (see Substitutes).

See Getting Substitutes from Other Servers, for more information on how to configure the daemon to get substitutes from other servers.

--no-offload

Do not use offload builds to other machines (see Using the Offload Facility). That is, always build things locally instead of offloading builds to remote machines.

--cache-failures

Cache build failures. By default, only successful builds are cached.

When this option is used, guix gc --list-failures can be used to query the set of store items marked as failed; guix gc --clear-failures removes store items from the set of cached failures. See Invoking guix gc.

--cores=n

-c n

Use n CPU cores to build each derivation; 0 means as many as available.

The default value is 0, but it may be overridden by clients, such as the --cores option of guix build (see Invoking guix build).

The effect is to define the NIX_BUILD_CORES environment variable in the build process, which can then use it to exploit internal parallelism, for instance by running make -j$NIX_BUILD_CORES.

--max-jobs=n

-M n

Allow at most n build jobs in parallel. The default value is 1. Setting it to 0 means that no builds will be performed locally; instead, the daemon will offload builds (see Using the Offload Facility), or simply fail.

--max-silent-time=seconds

When the build or substitution process remains silent for more than seconds, terminate it and report a build failure.

The default value is 3600 (one hour).

The value specified here can be overridden by clients (see --max-silent-time).

--timeout=seconds

Likewise, when the build or substitution process lasts for more than seconds, terminate it and report a build failure.

The default value is 24 hours.

The value specified here can be overridden by clients (see --timeout).

--rounds=N

Build each derivation N times in a row, and raise an error if consecutive build results are not bit-for-bit identical. Note that this setting can be overridden by clients such as guix build (see Invoking guix build).

When used in conjunction with --keep-failed, the differing output is kept in the store, under /gnu/store/…-check. This makes it easy to look for differences between the two results.

--debug

Produce debugging output.

This is useful to debug daemon start-up issues, but then it may be overridden by clients, for example the --verbosity option of guix build (see Invoking guix build).

--chroot-directory=dir

Add dir to the build chroot.

Doing this may change the result of build processes, for instance if they use optional dependencies found in dir when it is available, and not otherwise. For that reason, it is not recommended to do so. Instead, make sure that each derivation declares all the inputs that it needs.

--disable-chroot

Disable chroot builds.

Using this option is not recommended since, again, it would allow build processes to gain access to undeclared dependencies. It is necessary, though, when guix-daemon is running under an unprivileged user account.

--log-compression=type

Compress build logs according to type, one of gzip, bzip2, or none.

Unless --lose-logs is used, all the build logs are kept in the localstatedir. To save space, the daemon automatically compresses them with gzip by default.

--discover[=yes|no]

Whether to discover substitute servers on the local network using mDNS and DNS-SD.

This feature is still experimental. However, here are a few considerations.

- It might be faster/less expensive than fetching from remote servers;

- There are no security risks: only genuine substitutes will be used (see Substitute Authentication);

- An attacker advertising guix publish on your LAN cannot serve you malicious binaries, but they can learn what software you’re installing;

- Servers may serve substitutes over HTTP, unencrypted, so anyone on the LAN can see what software you’re installing.

It is also possible to enable or disable substitute server discovery at run-time by running:

herd discover guix-daemon on
herd discover guix-daemon off

--disable-deduplication

Disable automatic file “deduplication” in the store.

By default, files added to the store are automatically “deduplicated”: if a newly added file is identical to another one found in the store, the daemon makes the new file a hard link to the other file. This can noticeably reduce disk usage, at the expense of slightly increased input/output load at the end of a build process. This option disables this optimization.

--gc-keep-outputs[=yes|no]

Tell whether the garbage collector (GC) must keep outputs of live derivations.

When set to yes, the GC will keep the outputs of any live derivation available in the store, that is, of any live .drv file. The default is no, meaning that derivation outputs are kept only if they are reachable from a GC root. See Invoking guix gc, for more on GC roots.

--gc-keep-derivations[=yes|no]

Tell whether the garbage collector (GC) must keep derivations corresponding to live outputs.

When set to yes, as is the case by default, the GC keeps derivations (i.e., .drv files) as long as at least one of their outputs is live. This allows users to keep track of the origins of items in their store. Setting it to no saves a bit of disk space.

In this way, setting --gc-keep-derivations to yes causes liveness to flow from outputs to derivations, and setting --gc-keep-outputs to yes causes liveness to flow from derivations to outputs. When both are set to yes, the effect is to keep all the build prerequisites (the sources, compiler, libraries, and other build-time tools) of live objects in the store, regardless of whether these prerequisites are reachable from a GC root. This is convenient for developers since it saves rebuilds or downloads.

--impersonate-linux-2.6

On Linux-based systems, impersonate Linux 2.6. This means that the kernel’s uname system call will report 2.6 as the release number.

This might be helpful to build programs that (usually wrongfully) depend on the kernel version number.

--lose-logs

Do not keep build logs. By default they are kept under localstatedir/guix/log.

--system=system

Assume system as the current system type. By default it is the architecture/kernel pair found at configure time, such as x86_64-linux.

--listen=endpoint

Listen for connections on endpoint. endpoint is interpreted as the file name of a Unix-domain socket if it starts with / (slash sign). Otherwise, endpoint is interpreted as a host name or host name and port to listen to. Here are a few examples:

- --listen=/gnu/var/daemon: Listen for connections on the /gnu/var/daemon Unix-domain socket, creating it if needed.

- --listen=localhost: Listen for TCP connections on the network interface corresponding to localhost, on port 44146.

- --listen=128.0.0.42:1234: Listen for TCP connections on the network interface corresponding to 128.0.0.42, on port 1234.

This option can be repeated multiple times, in which case guix-daemon accepts connections on all the specified endpoints. Users can tell client commands what endpoint to connect to by setting the GUIX_DAEMON_SOCKET environment variable (see GUIX_DAEMON_SOCKET).

Note: The daemon protocol is unauthenticated and unencrypted. Using --listen=host is suitable on local networks, such as clusters, where only trusted nodes may connect to the build daemon. In other cases where remote access to the daemon is needed, we recommend using Unix-domain sockets along with SSH.

When --listen is omitted, guix-daemon listens for connections on the Unix-domain socket located at localstatedir/guix/daemon-socket/socket.
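The way a --listen endpoint is interpreted can be summarized in a few lines of shell. This is only a sketch of the rule as stated for this option: describe_endpoint is a hypothetical helper, not part of Guix, and it deliberately ignores IPv6 literals, whose colons would need separate handling.

```shell
#!/bin/sh
# Sketch only: describe_endpoint is a hypothetical helper, not a Guix command.
# It classifies an endpoint the way the --listen option is documented to.
describe_endpoint() {
    case "$1" in
        /*)  echo "unix-socket $1" ;;             # starts with a slash: socket file name
        *:*) echo "tcp ${1%:*} ${1##*:}" ;;       # host:port (IPv6 literals not handled)
        *)   echo "tcp $1 44146" ;;               # host only: documented default port
    esac
}

describe_endpoint /gnu/var/daemon
describe_endpoint localhost
describe_endpoint 128.0.0.42:1234
```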


2.4 Application Setup

When using Guix on top of a GNU/Linux distribution other than Guix System (a so-called foreign distro), a few additional steps are needed to get everything in place. Here are some of them.

2.4.1 Locales

Packages installed via Guix will not use the locale data of the host system. Instead, you must first install one of the locale packages available with Guix and then define the GUIX_LOCPATH environment variable:

$ guix install glibc-locales
$ export GUIX_LOCPATH=$HOME/.guix-profile/lib/locale

Note that the glibc-locales package contains data for all the locales supported by the GNU libc and weighs in at around 930 MiB. If you only need a few locales, you can define your custom locales package via the make-glibc-utf8-locales procedure from the (gnu packages base) module. The following example defines a package containing the various Canadian UTF-8 locales known to the GNU libc, that weighs around 14 MiB:

(use-modules (gnu packages base))

(define my-glibc-locales
  (make-glibc-utf8-locales
   glibc
   #:locales (list "en_CA" "fr_CA" "ik_CA" "iu_CA" "shs_CA")
   #:name "glibc-canadian-utf8-locales"))

The GUIX_LOCPATH variable plays a role similar to LOCPATH (see LOCPATH in The GNU C Library Reference Manual). There are two important differences though:

- GUIX_LOCPATH is honored only by the libc in Guix, and not by the libc provided by foreign distros. Thus, using GUIX_LOCPATH allows you to make sure the programs of the foreign distro will not end up loading incompatible locale data.

- libc suffixes each entry of GUIX_LOCPATH with /X.Y, where X.Y is the libc version (e.g., 2.22). This means that, should your Guix profile contain a mixture of programs linked against different libc versions, each libc version will only try to load locale data in the right format.

This is important because the locale data format used by different libc versions may be incompatible.
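The version suffix can be illustrated with a small shell sketch. expand_locpath is a hypothetical helper written for this example; it is not something Guix or libc provides, and it assumes that GUIX_LOCPATH entries are separated by colons, as they are for LOCPATH. It only prints the directories that a libc of a given version would look in, following the /X.Y rule stated above.

```shell
#!/bin/sh
# Sketch only: expand_locpath is a hypothetical helper that mirrors the
# documented rule "each entry of GUIX_LOCPATH is suffixed with /X.Y".
expand_locpath() {
    # $1: colon-separated list of directories (assumed separator)
    # $2: libc version, such as 2.22
    printf '%s\n' "$1" | tr ':' '\n' | while IFS= read -r entry; do
        if [ -n "$entry" ]; then
            printf '%s/%s\n' "$entry" "$2"
        fi
    done
}

# Two libc versions reading the same variable look in different directories.
expand_locpath "$HOME/.guix-profile/lib/locale" 2.22
expand_locpath "$HOME/.guix-profile/lib/locale" 2.35
```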

2.4.2 Name Service Switch

When using Guix on a foreign distro, we strongly recommend that the system run the GNU C library’s name service cache daemon, nscd, which should be listening on the /var/run/nscd/socket socket. Failing to do that, applications installed with Guix may fail to look up host names or user accounts, or may even crash. The next paragraphs explain why.

The GNU C library implements a name service switch (NSS), which is an extensible mechanism for “name lookups” in general: host name resolution, user accounts, and more (see Name Service Switch in The GNU C Library Reference Manual).

Being extensible, the NSS supports plugins, which provide new name lookup implementations: for example, the nss-mdns plugin allows resolution of .local host names, the nis plugin allows user account lookup using the Network Information Service (NIS), and so on. These extra “lookup services” are configured system-wide in /etc/nsswitch.conf, and all the programs running on the system honor those settings (see NSS Configuration File in The GNU C Library Reference Manual).

When they perform a name lookup (for instance, by calling the getaddrinfo function in C), applications first try to connect to the nscd; on success, nscd performs name lookups on their behalf. If the nscd is not running, then they perform the name lookup by themselves, by loading the name lookup services into their own address space and running them. These name lookup services (the libnss_*.so files) are dlopen’d, but they may come from the host system’s C library, rather than from the C library the application is linked against (the C library coming from Guix).

And this is where the problem is: if your application is linked against Guix’s C library (say, glibc 2.24) and tries to load NSS plugins from another C library (say, libnss_mdns.so for glibc 2.22), it will likely crash or have its name lookups fail unexpectedly.

Running nscd on the system, among other advantages, eliminates this binary incompatibility problem because those libnss_*.so files are loaded in the nscd process, not in applications themselves.
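A quick way to see whether the recommendation is met is to look for the socket named earlier in this section. The snippet below is a minimal sketch: nscd_socket_present is a hypothetical helper, and finding a socket file only suggests that a daemon is listening; it does not prove that lookups succeed.

```shell
#!/bin/sh
# Sketch only: nscd_socket_present is a hypothetical helper.
nscd_socket_present() {
    # Default to the path given in this section; pass another path to override.
    [ -S "${1:-/var/run/nscd/socket}" ]
}

if nscd_socket_present; then
    echo "nscd socket found"
else
    echo "nscd socket missing: Guix applications may load host NSS plugins themselves"
fi
```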

Note that nscd is no longer provided on some GNU/Linux distros, such as Arch Linux (as of Dec. 2024). nsncd can be used as a drop-in replacement. See the nsncd repository and this blog post for more information.

2.4.3 X11 Fonts

The majority of graphical applications use Fontconfig to locate and load fonts and perform X11-client-side rendering. The fontconfig package in Guix looks for fonts in $HOME/.guix-profile by default. Thus, to allow graphical applications installed with Guix to display fonts, you have to install fonts with Guix as well. Essential font packages include font-ghostscript, font-dejavu, and font-gnu-freefont.

Once you have installed or removed fonts, or when you notice an application that does not find fonts, you may need to install Fontconfig and to force an update of its font cache by running:

guix install fontconfig
fc-cache -rv

To display text written in Chinese languages, Japanese, or Korean in graphical applications, consider installing font-adobe-source-han-sans or font-wqy-zenhei. The former has multiple outputs, one per language family (see Packages with Multiple Outputs). For instance, the following command installs fonts for Chinese languages:

guix install font-adobe-source-han-sans:cn

Older programs such as xterm do not use Fontconfig and instead rely on server-side font rendering. Such programs require you to specify the full name of a font using XLFD (X Logical Font Description), like this:

-*-dejavu sans-medium-r-normal-*-*-100-*-*-*-*-*-1

To be able to use such full names for the TrueType fonts installed in your Guix profile, you need to extend the font path of the X server:

xset +fp $(dirname $(readlink -f ~/.guix-profile/share/fonts/truetype/fonts.dir))

After that, you can run xlsfonts (from the xlsfonts package) to make sure your TrueType fonts are listed there.

2.4.4 X.509 Certificates

The nss-certs package provides X.509 certificates, which allow programs to authenticate Web servers accessed over HTTPS.

When using Guix on a foreign distro, you can install this package and define the relevant environment variables so that packages know where to look for certificates. See X.509 Certificates, for detailed information.

2.4.5 Emacs Packages

When you install Emacs packages with Guix, the Elisp files are placed under the share/emacs/site-lisp/ directory of the profile in which they are installed. The Elisp libraries are made available to Emacs through the EMACSLOADPATH environment variable, which is set when installing Emacs itself.

Additionally, autoload definitions are automatically evaluated at the initialization of Emacs, by the Guix-specific guix-emacs-autoload-packages procedure. This procedure can be interactively invoked to have newly installed Emacs packages discovered, without having to restart Emacs. If, for some reason, you want to avoid auto-loading the Emacs packages installed with Guix, you can do so by running Emacs with the --no-site-file option (see Init File in The GNU Emacs Manual).

Note: Most Emacs variants are now capable of doing native compilation. The approach taken by Guix Emacs however differs greatly from the approach taken upstream.

Upstream Emacs compiles packages just-in-time and typically places shared object files in a special folder within your user-emacs-directory. These shared objects within said folder are organized in a flat hierarchy, and their file names contain two hashes to verify the original file name and contents of the source code.

Guix Emacs on the other hand prefers to compile packages ahead-of-time. Shared objects retain much of the original file name and no hashes are added to verify the original file name or the contents of the file. Crucially, this allows Guix Emacs and packages built against it to be grafted (see grafts), but at the same time, Guix Emacs lacks the hash-based verification of source code baked into upstream Emacs. As this naming schema is trivial to exploit, we disable just-in-time compilation.

Further note that emacs-minimal, the default Emacs for building packages, has been configured without native compilation. To natively compile your Emacs packages ahead of time, use a transformation like --with-input=emacs-minimal=emacs.


2.5 Upgrading Guix

To upgrade Guix, run:

guix pull

See Invoking guix pull, for more information.

On a foreign distro, you can upgrade the build daemon by running:

sudo -i guix pull

followed by (assuming your distro uses the systemd service management tool):

systemctl restart guix-daemon.service

On Guix System, upgrading the daemon is achieved by reconfiguring the system (see guix system reconfigure).


3 System Installation

This section explains how to install Guix System on a machine. Guix, as a package manager, can also be installed on top of a running GNU/Linux system (see Installation).

- Limitations

- Hardware Considerations

- USB Stick and DVD Installation

- Preparing for Installation

- Guided Graphical Installation

- Manual Installation

- After System Installation

- Installing Guix in a Virtual Machine

- Building the Installation Image

- Building the Installation Image for ARM Boards


3.1 Limitations

We consider Guix System to be ready for a wide range of “desktop” and server use cases. The reliability guarantees it provides—transactional upgrades and rollbacks, reproducibility—make it a solid foundation.

More and more system services are provided (see Services).

Nevertheless, before you proceed with the installation, be aware that some services you rely on may still be missing from version ce086e3.

More than a disclaimer, this is an invitation to report issues (and success stories!), and to join us in improving it. See Contributing, for more info.


3.2 Hardware Considerations

GNU Guix focuses on respecting the user’s computing freedom. It builds around the kernel Linux-libre, which means that only hardware for which free software drivers and firmware exist is supported. Nowadays, a wide range of off-the-shelf hardware is supported on GNU/Linux-libre—from keyboards to graphics cards to scanners and Ethernet controllers. Unfortunately, there are still areas where hardware vendors deny users control over their own computing, and such hardware is not supported on Guix System.

One of the main areas where free drivers or firmware are lacking is WiFi devices. WiFi devices known to work include those using Atheros chips (AR9271 and AR7010), which correspond to the ath9k Linux-libre driver, and those using Broadcom/AirForce chips (BCM43xx with Wireless-Core Revision 5), which correspond to the b43-open Linux-libre driver. Free firmware exists for both and is available out-of-the-box on Guix System, as part of %base-firmware (see firmware).

The installer warns you early on if it detects devices that are known not to work due to the lack of free firmware or free drivers.

The Free Software Foundation runs Respects Your Freedom (RYF), a certification program for hardware products that respect your freedom and your privacy and ensure that you have control over your device. We encourage you to check the list of RYF-certified devices.

Another useful resource is the H-Node web site. It contains a catalog of hardware devices with information about their support in GNU/Linux.


3.3 USB Stick and DVD Installation

An ISO-9660 installation image that can be written to a USB stick or burnt to a DVD can be downloaded from ‘https://ftp.gnu.org/gnu/guix/guix-system-install-ce086e3.x86_64-linux.iso’, where you can replace x86_64-linux with one of:

- x86_64-linux, for a GNU/Linux system on Intel/AMD-compatible 64-bit CPUs;

- i686-linux, for a 32-bit GNU/Linux system on Intel-compatible CPUs.

Make sure to download the associated .sig file and to verify the authenticity of the image against it, along these lines:

$ wget https://ftp.gnu.org/gnu/guix/guix-system-install-ce086e3.x86_64-linux.iso.sig
$ gpg --verify guix-system-install-ce086e3.x86_64-linux.iso.sig

If that command fails because you do not have the required public key, then run this command to import it:

$ wget 'https://sv.gnu.org/people/viewgpg.php?user_id=15145' \
      -qO - | gpg --import -

and rerun the gpg --verify command.

Take note that a warning like “This key is not certified with a trusted signature!” is normal.
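The download and verification steps can be wrapped in a short script. This is a sketch under stated assumptions: image_url and fetch_and_verify are hypothetical helper names, the URL layout is simply the one quoted in this section, and fetch_and_verify needs network access plus the signing key already imported, so it is defined here but not called.

```shell
#!/bin/sh
# Sketch only: image_url and fetch_and_verify are hypothetical helpers.
version=ce086e3   # version string used throughout this section

image_url() {
    # $1: system type, x86_64-linux or i686-linux
    echo "https://ftp.gnu.org/gnu/guix/guix-system-install-$version.$1.iso"
}

fetch_and_verify() {
    url=$(image_url "$1")
    file=${url##*/}
    # Download the image and its detached signature, then check one against the other.
    wget "$url" "$url.sig" &&
        gpg --verify "$file.sig" "$file"
}

# Show which URL would be fetched; run "fetch_and_verify x86_64-linux" to download.
image_url x86_64-linux
```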

This image contains the tools necessary for an installation. It is meant to be copied as is to a large-enough USB stick or DVD.

Copying to a USB Stick

Insert a USB stick of 1 GiB or more into your machine, and determine its device name. Assuming that the USB stick is known as /dev/sdX, copy the image with:

dd if=guix-system-install-ce086e3.x86_64-linux.iso of=/dev/sdX status=progress
sync

Access to /dev/sdX usually requires root privileges.

Burning on a DVD

Insert a blank DVD into your machine, and determine its device name. Assuming that the DVD drive is known as /dev/srX, copy the image with:

growisofs -dvd-compat -Z /dev/srX=guix-system-install-ce086e3.x86_64-linux.iso

Access to /dev/srX usually requires root privileges.

Booting

Once this is done, you should be able to reboot the system and boot from the USB stick or DVD. The latter usually requires you to get in the BIOS or UEFI boot menu, where you can choose to boot from the USB stick. In order to boot from Libreboot, switch to the command mode by pressing the c key and type search_grub usb.

Sadly, on some machines, the installation medium cannot be properly booted and you only see a black screen after booting even after you waited for ten minutes. This may indicate that your machine cannot run Guix System; perhaps you instead want to install Guix on a foreign distro (see Binary Installation). But don’t give up just yet; a possible workaround is pressing the e key in the GRUB boot menu and appending nomodeset to the Linux bootline. Sometimes the black screen issue can also be resolved by connecting a different display.

See Installing Guix in a Virtual Machine, if, instead, you would like to install Guix System in a virtual machine (VM).


3.4 Preparing for Installation

Once you have booted, you can use the guided graphical installer, which makes it easy to get started (see Guided Graphical Installation). Alternatively, if you are already familiar with GNU/Linux and if you want more control than what the graphical installer provides, you can choose the “manual” installation process (see Manual Installation).

The graphical installer is available on TTY1. You can obtain root shells on TTYs 3 to 6 by hitting ctrl-alt-f3, ctrl-alt-f4, etc. TTY2 shows this documentation and you can reach it with ctrl-alt-f2. Documentation is browsable using the Info reader commands (see Stand-alone GNU Info). The installation system runs the GPM mouse daemon, which allows you to select text with the left mouse button and to paste it with the middle button.

Note: Installation requires access to the Internet so that any missing dependencies of your system configuration can be downloaded. See the “Networking” section below.


3.5 Guided Graphical Installation

The graphical installer is a text-based user interface. It will guide you, with dialog boxes, through the steps needed to install GNU Guix System.

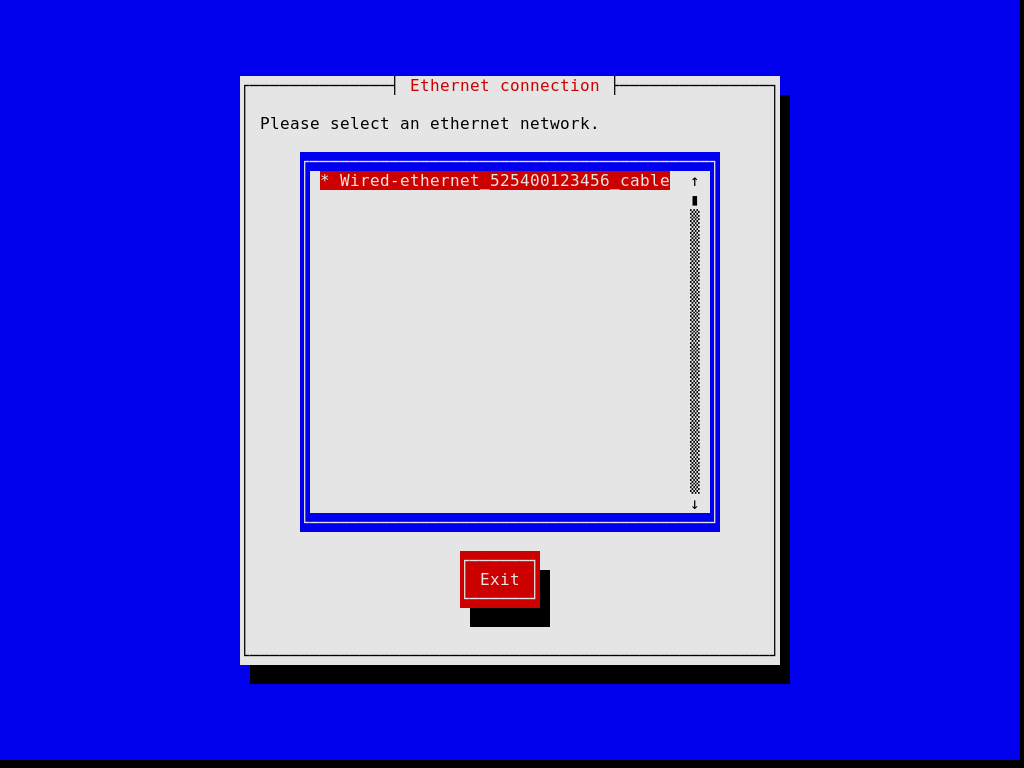

The first dialog boxes allow you to set up the system as you use it during the installation: you can choose the language, keyboard layout, and set up networking, which will be used during the installation. The image below shows the networking dialog.

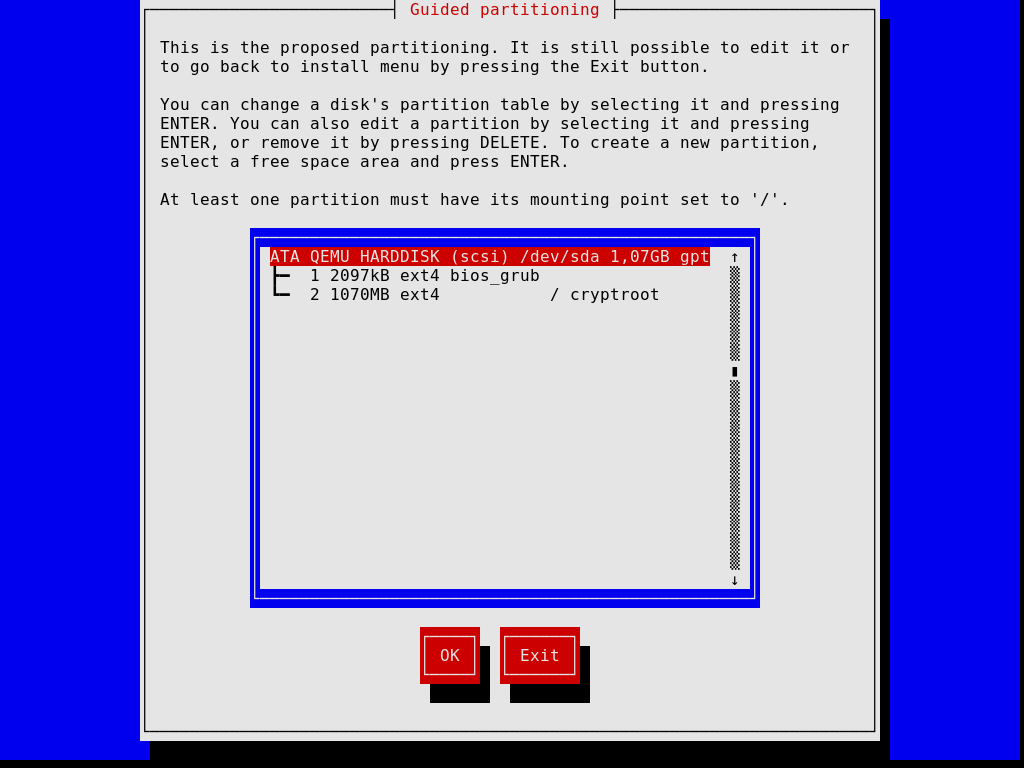

Later steps allow you to partition your hard disk, as shown in the image below, to choose whether or not to use encrypted file systems, to enter the host name and root password, and to create an additional account, among other things.

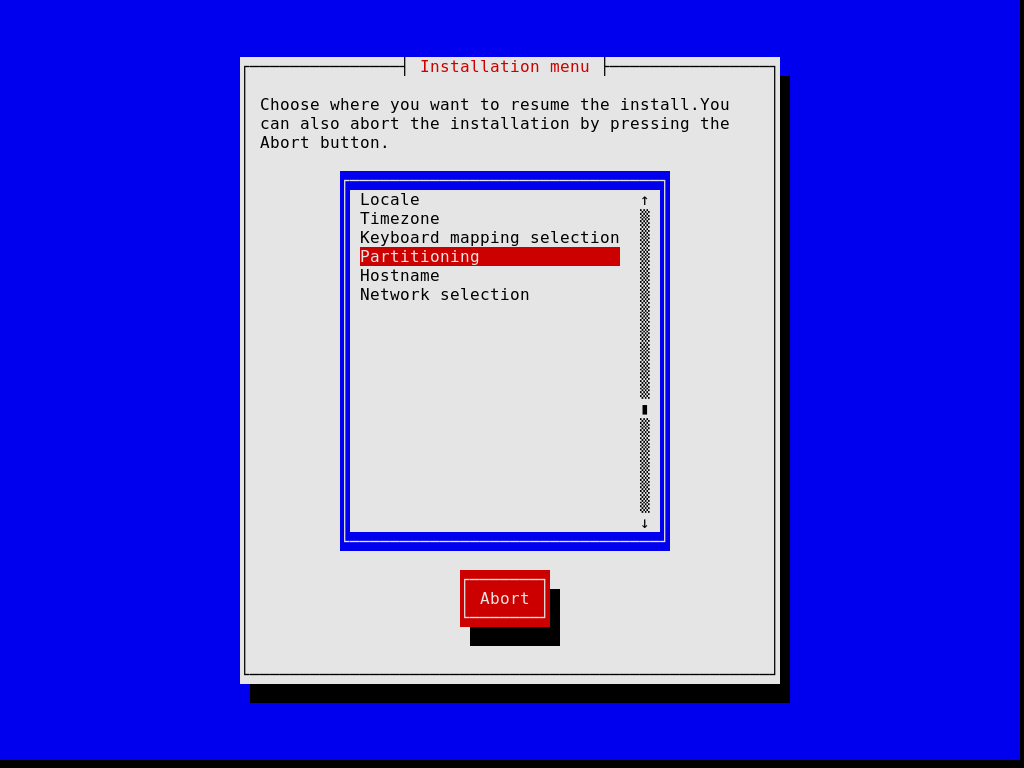

Note that, at any time, the installer allows you to exit the current installation step and resume at a previous step, as shown in the image below.

Once you’re done, the installer produces an operating system configuration and displays it (see Using the Configuration System). At that point you can hit “OK” and installation will proceed. On success, you can reboot into the new system and enjoy. See After System Installation, for what’s next!


3.6 Manual Installation

This section describes how you would “manually” install GNU Guix System on your machine. This option requires familiarity with GNU/Linux, with the shell, and with common administration tools. If you think this is not for you, consider using the guided graphical installer (see Guided Graphical Installation).

The installation system provides root shells on TTYs 3 to 6; press ctrl-alt-f3, ctrl-alt-f4, and so on to reach them. It includes many common tools needed to install the system, but is also a full-blown Guix System. This means that you can install additional packages, should you need them, using guix package (see Invoking guix package).


3.6.1 Keyboard Layout, Networking, and Partitioning

Before you can install the system, you may want to adjust the keyboard layout, set up networking, and partition your target hard disk. This section will guide you through this.

3.6.1.1 Keyboard Layout

The installation image uses the US qwerty keyboard layout. If you want to change it, you can use the loadkeys command. For example, the following command selects the Dvorak keyboard layout:

loadkeys dvorak

See the files under /run/current-system/profile/share/keymaps for a list of available keyboard layouts. Run man loadkeys for more information.

3.6.1.2 Networking

Run the following command to see what your network interfaces are called:

ifconfig -a

… or, using the GNU/Linux-specific ip command:

ip address

Wired interfaces have a name starting with ‘e’; for example, the interface corresponding to the first on-board Ethernet controller is called ‘eno1’. Wireless interfaces have a name starting with ‘w’, like ‘w1p2s0’.
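The naming convention just described is easy to apply in a script. The following sketch is illustrative only: interface_kind and list_interfaces are hypothetical helpers, and reading names from /sys/class/net is an assumption about a Linux system rather than something the installer requires.

```shell
#!/bin/sh
# Sketch only: both functions are hypothetical helpers for illustration.
interface_kind() {
    case "$1" in
        e*) echo wired ;;      # names starting with 'e', such as eno1
        w*) echo wireless ;;   # names starting with 'w', such as w1p2s0
        *)  echo other ;;      # anything else, such as lo
    esac
}

list_interfaces() {
    # Assumes the Linux sysfs layout; prints nothing if it is absent.
    for path in /sys/class/net/*; do
        [ -e "$path" ] || continue
        name=${path##*/}
        echo "$name: $(interface_kind "$name")"
    done
}

list_interfaces
```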

- Wired connection

To configure a wired network run the following command, substituting interface with the name of the wired interface you want to use.

ifconfig interface up

… or, using the GNU/Linux-specific

ipcommand:ip link set interface up

- Wireless connection ¶

-

To configure wireless networking, you can create a configuration file for the

wpa_supplicantconfiguration tool (its location is not important) using one of the available text editors such asnano:nano wpa_supplicant.conf

As an example, the following stanza can go to this file and will work for many wireless networks, provided you give the actual SSID and passphrase for the network you are connecting to:

network={
  ssid="my-ssid"
  key_mgmt=WPA-PSK
  psk="the network's secret passphrase"
}

Start the wireless service and run it in the background with the following command (substitute interface with the name of the network interface you want to use):

wpa_supplicant -c wpa_supplicant.conf -i interface -B

Run man wpa_supplicant for more information.
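If you would rather not type the stanza by hand, it can be generated from a script. The following is a minimal sketch: the function name write_wpa_conf is hypothetical, and it assumes that neither the SSID nor the passphrase contains a double quote.

```shell
# write_wpa_conf FILE SSID PASSPHRASE
# Hypothetical helper: write a minimal WPA-PSK stanza to FILE.
# Assumes SSID and PASSPHRASE contain no double quotes.
write_wpa_conf() {
    file=$1 ssid=$2 psk=$3
    (
        # The file holds a secret: create it readable by its owner only.
        umask 077
        cat > "$file" <<EOF
network={
  ssid="$ssid"
  key_mgmt=WPA-PSK
  psk="$psk"
}
EOF
    )
}
```

For example, write_wpa_conf wpa_supplicant.conf my-ssid 'my passphrase' produces a file that can be passed to wpa_supplicant with the -c option.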

At this point, you need to acquire an IP address. On a network where IP addresses are automatically assigned via DHCP, you can run:

dhclient -v interface

Try to ping a server to see if networking is up and running:

ping -c 3 gnu.org

Setting up network access is almost always a requirement because the image does not contain all the software and tools that may be needed.

If you need HTTP and HTTPS access to go through a proxy, run the following command:

herd set-http-proxy guix-daemon URL

where URL is the proxy URL, for example http://example.org:8118.

If you want to, you can continue the installation remotely by starting an SSH server:

herd start ssh-daemon

Make sure to either set a password with passwd, or configure OpenSSH public key authentication before logging in.

3.6.1.3 Disk Partitioning

Unless this has already been done, the next step is to partition, and then format the target partition(s).

The installation image includes several partitioning tools, including Parted (see Overview in GNU Parted User Manual), fdisk, and cfdisk. Run one of them and set up your disk with the partition layout you want:

cfdisk

If your disk uses the GUID Partition Table (GPT) format and you plan to install BIOS-based GRUB (which is the default), make sure a BIOS Boot Partition is available (see BIOS installation in GNU GRUB manual).

If you instead wish to use EFI-based GRUB, a FAT32 EFI System Partition (ESP) is required. This partition can be mounted at /boot/efi for instance and must have the esp flag set. E.g., for parted:

parted /dev/sda set 1 esp on

Note: Unsure whether to use EFI- or BIOS-based GRUB? If the directory /sys/firmware/efi exists in the installation image, then you should probably perform an EFI installation, using grub-efi-bootloader. Otherwise you should use the BIOS-based GRUB, known as grub-bootloader. See Bootloader Configuration, for more info on bootloaders.
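The check described in the note is easy to script. Here is a minimal sketch; the function name suggest_bootloader is hypothetical, and its optional argument exists only so the check can be tried against another directory.

```shell
# suggest_bootloader [EFI_DIR]
# Hypothetical helper: print the bootloader name suggested by the
# presence of EFI_DIR (default: /sys/firmware/efi).
suggest_bootloader() {
    efi_dir=${1:-/sys/firmware/efi}
    if [ -d "$efi_dir" ]; then
        echo grub-efi-bootloader
    else
        echo grub-bootloader
    fi
}

suggest_bootloader
```

The result is only a suggestion: firmware that supports both modes may have booted the installation image in either one.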

Once you are done partitioning the target hard disk drive, you have to create a file system on the relevant partition(s) [10]. For the ESP, if you have one and assuming it is /dev/sda1, run:

mkfs.fat -F32 /dev/sda1

For the root file system, ext4 is the most widely used format. Other file systems, such as Btrfs, support compression, which is reported to nicely complement file deduplication that the daemon performs independently of the file system (see deduplication).

Preferably, assign file systems a label so that you can easily and reliably refer to them in file-system declarations (see File Systems). This is typically done using the -L option of mkfs.ext4 and related commands. So, assuming the target root partition lives at /dev/sda2, a file system with the label my-root can be created with:

mkfs.ext4 -L my-root /dev/sda2

If you are instead planning to encrypt the root partition, you can use the Cryptsetup/LUKS utilities to do that (see man cryptsetup for more information).

Assuming you want to store the root partition on /dev/sda2, the command sequence to format it as a LUKS partition would be along these lines:

cryptsetup luksFormat /dev/sda2
cryptsetup open /dev/sda2 my-partition
mkfs.ext4 -L my-root /dev/mapper/my-partition

Once that is done, mount the target file system under /mnt with a command like the following (again, assuming my-root is the label of the root file system):

mount LABEL=my-root /mnt

Also mount any other file systems you would like to use on the target system relative to this path. If you have opted for /boot/efi as an EFI mount point for example, mount it at /mnt/boot/efi now so it is found by guix system init afterwards.
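Because later steps depend on these file systems being mounted, it can help to verify the mounts before going further. The following is a minimal sketch, assuming a Linux /proc/mounts whose second field is the mount point; the function name is_mounted is hypothetical.

```shell
# is_mounted DIR [MOUNTS_FILE]
# Hypothetical helper: succeed if DIR is listed as a mount point in
# MOUNTS_FILE (default: /proc/mounts). Paths containing spaces are
# escaped in /proc/mounts and are not handled here.
is_mounted() {
    awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}
```

For example, is_mounted /mnt/boot/efi || echo "ESP is not mounted" reports a forgotten EFI System Partition.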

Finally, if you plan to use one or more swap partitions (see Swap Space), make sure to initialize them with mkswap. Assuming you have one swap partition on /dev/sda3, you would run:

mkswap /dev/sda3
swapon /dev/sda3

Alternatively, you may use a swap file. For example, assuming that in the new system you want to use the file /swapfile as a swap file, you would run [11]:

# This is 10 GiB of swap space. Adjust "count" to change the size.
dd if=/dev/zero of=/mnt/swapfile bs=1MiB count=10240
# For security, make the file readable and writable only by root.
chmod 600 /mnt/swapfile
mkswap /mnt/swapfile
swapon /mnt/swapfile

Note that if you have encrypted the root partition and created a swap file in its file system as described above, then the encryption also protects the swap file, just like any other file in that file system.
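With a block size of 1 MiB, the count passed to dd is the swap size in GiB multiplied by 1024, which is how 10 GiB becomes 10240. A minimal sketch of that arithmetic follows; the function name swap_count_mib is hypothetical, and the dd command is only printed, not run.

```shell
# swap_count_mib SIZE_GIB
# Hypothetical helper: number of 1 MiB blocks in SIZE_GIB GiB.
swap_count_mib() {
    echo $(( $1 * 1024 ))
}

# Print (without running it) the dd command for an 8 GiB swap file.
echo "dd if=/dev/zero of=/mnt/swapfile bs=1MiB count=$(swap_count_mib 8)"
```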


3.6.2 Proceeding with the Installation

With the target partitions ready and the target root mounted on /mnt, we’re ready to go. First, run:

herd start cow-store /mnt

This makes /gnu/store copy-on-write, such that packages added to it during the installation phase are written to the target disk on /mnt rather than kept in memory. This is necessary because the first phase of the guix system init command (see below) entails downloads or builds to /gnu/store which, initially, is an in-memory file system.

Next, you have to edit a file and provide the declaration of the operating system to be installed. To that end, the installation system comes with three text editors. We recommend GNU nano (see GNU nano Manual), which supports syntax highlighting and parentheses matching; other editors include mg (an Emacs clone), and nvi (a clone of the original BSD vi editor).

We strongly recommend storing that file on the target root file system, say, as /mnt/etc/config.scm. If you do not, you will lose your configuration file once you reboot into the newly installed system.

See Using the Configuration System, for an overview of the configuration file. The example configurations discussed in that section are available under /etc/configuration in the installation image. Thus, to get started with a system configuration providing a graphical display server (a “desktop” system), you can run something along these lines:

# mkdir /mnt/etc
# cp /etc/configuration/desktop.scm /mnt/etc/config.scm
# nano /mnt/etc/config.scm

You should pay attention to what your configuration file contains, and in particular:

- Make sure the bootloader-configuration form refers to the targets you want to install GRUB on. It should mention grub-bootloader if you are installing GRUB in the legacy way, or grub-efi-bootloader for newer UEFI systems. For legacy systems, the targets field contains the names of the devices, like (list "/dev/sda"); for UEFI systems it names the paths to mounted EFI partitions, like (list "/boot/efi"); do make sure the paths are currently mounted and a file-system entry is specified in your configuration.

- Be sure that your file system labels match the value of their respective device fields in your file-system configuration, assuming your file-system configuration uses the file-system-label procedure in its device field.

- If there are encrypted or RAID partitions, make sure to add a mapped-devices field to describe them (see Mapped Devices).

Once you are done preparing the configuration file, the new system must be initialized (remember that the target root file system is mounted under /mnt):

guix system init /mnt/etc/config.scm /mnt

This copies all the necessary files and installs GRUB on /dev/sdX, unless you pass the --no-bootloader option. For more information, see Invoking guix system. This command may trigger downloads or builds of missing packages, which can take some time.
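Since initialization can take a while, a quick sanity check of the configuration file before starting may save time. The following is a minimal sketch covering only a few of the points listed earlier; the function name preflight and its messages are hypothetical, and a textual search is no substitute for reading the configuration.

```shell
# preflight CONFIG TARGET
# Hypothetical helper: print "ok" if CONFIG exists and mentions a
# bootloader-configuration form; print a diagnostic and fail otherwise.
# Warn when CONFIG is not stored under TARGET.
preflight() {
    config=$1 target=$2
    [ -d "$target" ] || { echo "no such directory: $target"; return 1; }
    [ -f "$config" ] || { echo "no such file: $config"; return 1; }
    case "$config" in
        "$target"/*) ;;
        *) echo "warning: $config is not under $target" ;;
    esac
    if ! grep -q 'bootloader-configuration' "$config"; then
        echo "no bootloader-configuration form in $config"
        return 1
    fi
    echo "ok"
}
```

A typical call would be preflight /mnt/etc/config.scm /mnt, run just before guix system init.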

Once that command has completed, and hopefully succeeded, you can run reboot and boot into the new system. The root password in the new system is initially empty; other users’ passwords need to be initialized by running the passwd command as root, unless your configuration specifies otherwise (see user account passwords).

See After System Installation, for what’s next!


3.7 After System Installation

Success, you’ve now booted into Guix System! Log in to the system using the non-root user that you created during installation. You can upgrade the system whenever you want by running:

guix pull
sudo guix system reconfigure /etc/config.scm

This builds a new system generation with the latest packages and services.

Now, see Getting Started, and join us on #guix on the Libera.Chat IRC network or on guix-devel@gnu.org to share your experience!


3.8 Installing Guix in a Virtual Machine

If you’d like to install Guix System in a virtual machine (VM) or on a virtual private server (VPS) rather than on your beloved machine, this section is for you.

To boot a QEMU VM for installing Guix System in a disk image, follow these steps:

- First, retrieve and decompress the Guix system installation image as described previously (see USB Stick and DVD Installation).

- Create a disk image that will hold the installed system. To make a qcow2-formatted disk image, use the qemu-img command:

qemu-img create -f qcow2 guix-system.img 50G

The resulting file will be much smaller than 50 GB (typically less than 1 MB), but it will grow as the virtualized storage device is filled up.

- Boot the USB installation image in a VM:

qemu-system-x86_64 -m 1024 -smp 1 -enable-kvm \
  -nic user,model=virtio-net-pci -boot menu=on,order=d \
  -drive file=guix-system.img \
  -drive media=cdrom,readonly=on,file=guix-system-install-ce086e3.system.iso

-enable-kvm is optional, but significantly improves performance, see Running Guix in a Virtual Machine.

- You’re now root in the VM, proceed with the installation process. See Preparing for Installation, and follow the instructions.

Once installation is complete, you can boot the system that’s on your guix-system.img image. See Running Guix in a Virtual Machine, for how to do that.
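Because -enable-kvm is optional, a wrapper script can add it only when KVM appears to be usable. The following is a minimal sketch that prints the command line rather than running it; the function name qemu_install_cmd is hypothetical, and treating the presence of /dev/kvm as a sign that KVM is available is an assumption.

```shell
# qemu_install_cmd DISK ISO [KVM_DEVICE]
# Hypothetical helper: print a QEMU command line for booting ISO with
# DISK attached, adding -enable-kvm only if KVM_DEVICE (default:
# /dev/kvm) exists.
qemu_install_cmd() {
    disk=$1 iso=$2 kvm=${3:-/dev/kvm}
    accel=
    if [ -e "$kvm" ]; then
        accel=" -enable-kvm"
    fi
    echo "qemu-system-x86_64 -m 1024 -smp 1$accel" \
         "-nic user,model=virtio-net-pci -boot menu=on,order=d" \
         "-drive file=$disk" \
         "-drive media=cdrom,readonly=on,file=$iso"
}
```

The printed line can be reviewed and then pasted into a shell, which keeps the sketch free of side effects.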


3.9 Building the Installation Image

The installation image described above was built using the guix system command, specifically:

guix system image -t iso9660 gnu/system/install.scm

Have a look at gnu/system/install.scm in the source tree, and see also Invoking guix system for more information about the installation image.

3.10 Building the Installation Image for ARM Boards

Many ARM boards require a specific variant of the U-Boot bootloader.

If you build a disk image and the bootloader is not available otherwise (on another boot drive, etc.), it’s advisable to build an image that includes the bootloader, specifically:

guix system image --system=armhf-linux -e '((@ (gnu system install) os-with-u-boot) (@ (gnu system install) installation-os) "A20-OLinuXino-Lime2")'

A20-OLinuXino-Lime2 is the name of the board. If you specify an invalid board, a list of possible boards will be printed.
